Best computer science schools ranked by boba

Many people underestimate the importance of boba when they choose grad school. For those who don't know what boba is ...

I'm Boyuan Chen (陈博远), a research scientist at OpenAI. I am one of the few researchers training GPT image generation. I earned my PhD in EECS from MIT, with a minor in philosophy. My research centers on world models, embodied AI, and reinforcement learning—combining them to make AI systems more capable of understanding and acting in the physical world. I have authored several papers recognized in both industry and academia, including Diffusion Forcing, SpatialVLM, and History Guidance.

I was fortunate to be advised by Prof. Russ Tedrake and Prof. Vincent Sitzmann during my PhD, Prof. Pieter Abbeel during my undergrad, and Dr. Fei Xia during my internship at DeepMind. I completed my bachelor's degree in computer science and mathematics at UC Berkeley, where I also spent a year studying philosophy. Outside of research, I enjoy chess, building robots, and making boba.

I designed it with my friend, Kinsky. We sold it as an education kit to schools. You can ride on it!

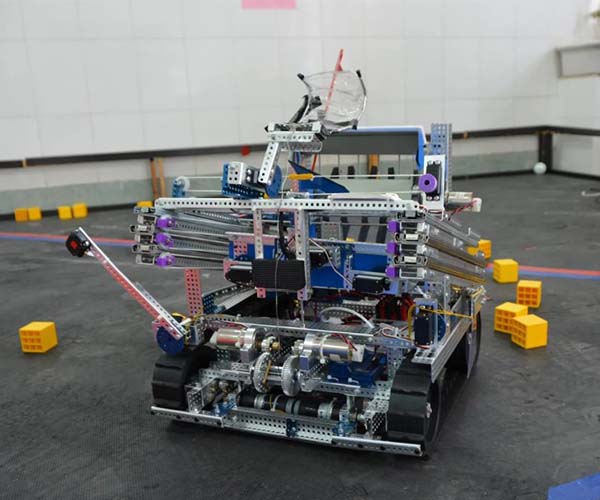

DJI robomaster robot for ICRA AI Challenge. During my undergrad, I was the captain of the team, leading the development of autonomous algorithms in the robot shooting challenge.

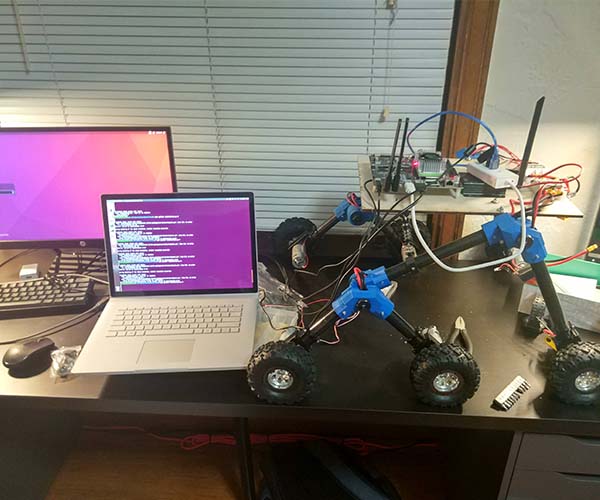

My personal robot that can handle a variety of terrains. I did everything from machanical design, electronics to programming. It uses computer vision to autonomously follow me and avoid obstables.

In 2017, I founded my high school's first FRC team. We didn't have the mentorship nor funding we need, but the team did amazing. I did the majority of the design.

In 2021, I graduated from UC Berkeley, where I spent some amazing time doing research in robotics learning lab.

An autonomous drone which I built and coded. I installed a camera a mini railgun on it to track and aim at the target I select.

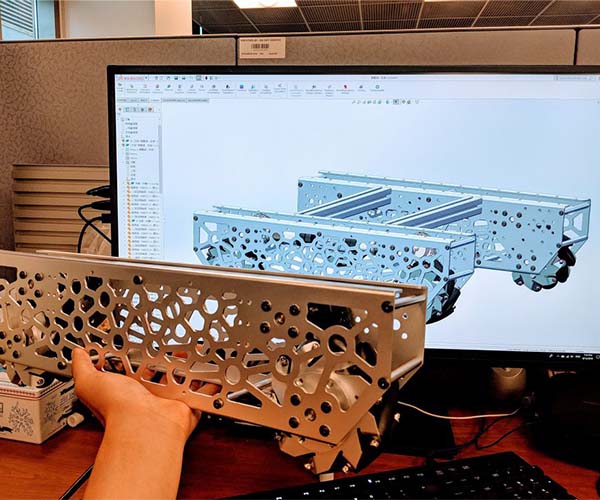

Our FTC competition robot in 2017, when I became the captain of the team. It's my team's first robot designed with CAD. The robot won the east China regional.

In 2016, I participanted in robotics competition for the first time. This is a super cool robot which marks the beginning of my robotics journey.

After my graduation from high school, I continued mentoring the team. My successor Xinpei designed the robot under my mentorship.

In 2019, I was the captain of Berkeley's team in ICRA Robomaster AI Challenge. I co-founded the team and lead 20 student developing autonomous robots.

I became one of the execs at MIT Chess Club in 2022. It was a great time to organize events and hangout with the team!

In 2017, I founded my high school's first FRC team. I worked as both captain and mentor. We won the Rookie All Star Award at CRC 2017.

To celebrate Chinese New Year 2022, I made a big dinner with my friend Maohao Shen at MIT. MITCSSA awarded me the title Master Chef MIT for my Peking duck in their cooking competition.

I cooked 黄焖鸡 during COVID-19 quarantine!

I made Dongporou (东坡肉) during Thanksgiving 2023. The best Soy sauce braised pork I've every made!

Traditional Chinese chicken soup with dried matsutake mushroom.

I cooked 5 dished for 2023 Chinese New Year. All of them are amazing! The dishes are steam eel, egg plant with minced meat, soy sauce braised pork with bamboo shoots, chinese chicken soup with bamboo-mushroom and stir-fried Chinese chives.

I made my roommate and long time friend Haoyuan a bowl of traditional birthday noodle in 2021, when he turned 23.

I cooked beef brisket (土豆炖排骨) in COVID-19 lockdown.

During the COVID-19 pandemic, I tried to make Lamb Croutons following Gordon Ramsay's tutorial.

Tofu stew cooked with various mushrooms and XO sauce. The Umami flavor will burst into your mouse - it's finished in 2 minutes by all my friends.

Many people underestimate the importance of boba when they choose grad school. For those who don't know what boba is ...

以ChatGPT为代表的大模型让我们瞥见了未来的一隅。机器人大模型在过去一年里出现在了几乎每一个机器人公司的PPT里。那么大语言模型的思路会给我们带通用机器人么? ...

ChatGPT has given us a glimpse of the future. So, will the same bring us general-purpose robots? ...